1. Executive Summary

The age of personal AI agents has arrived. Hundreds of thousands of users worldwide are adopting autonomous AI systems that act on their behalf: booking flights, managing calendars, reading emails, executing code, and automating daily workflows. The demand is undeniable. But the industry has a fundamental problem: every existing AI agent operates without verifiable identity, without cryptographic permission scoping, and without architectural separation between data and control planes. The result is a class of software that is powerful, useful, and deeply unsafe.

The security failures of early AI agents are not bugs to be patched. They are architectural. When an AI agent is built as a high-privilege service account manipulable via natural language, with no firewall between data processing and action execution, prompt injection attacks can trigger unauthorized data access, credential theft, and financial transactions. Independent security research has documented real-world attacks including cryptocurrency wallet draining, silent data exfiltration through third-party skills, and remote code execution through indirect prompt injection embedded in ordinary web pages.

Digital Me asks a different question entirely:

What if a personal AI agent were built from the ground up on verifiable identity, cryptographic security boundaries, and user sovereignty over data?

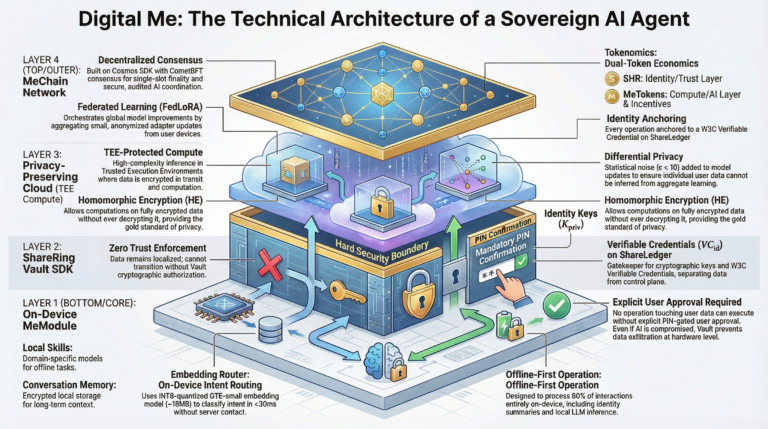

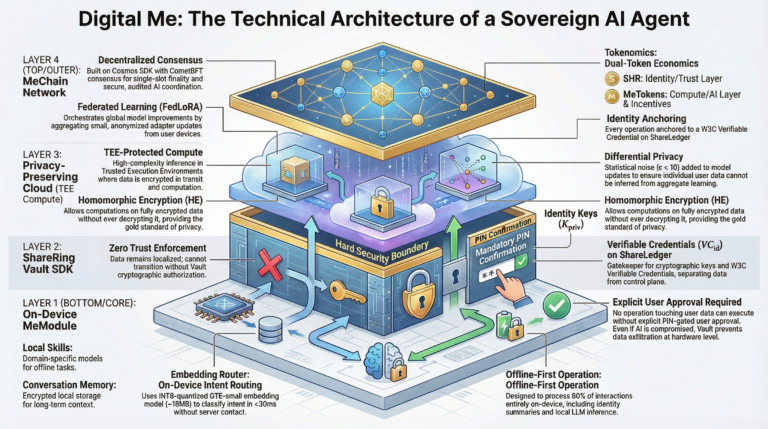

Built on ShareRing’s production self-sovereign identity (SSI) infrastructure – including ShareLedger (a Cosmos SDK app-chain with CometBFT consensus), W3C Verifiable Credentials, zero-knowledge soulbound tokens, and the ShareRing Vault SDK – Digital Me introduces a fundamentally different architecture. Every operation that touches user data requires cryptographic user confirmation hardcoded at the SDK level, not enforced by the AI. Skills execute in sandboxed environments with capability-based permissions. An on-device embedding router classifies intent in under 30 milliseconds without sending data to any server. And the entire system is open source, auditable, and extensible.

This whitepaper is the culmination of 18 months of research, development, and rigorous technical verification. Every architectural decision, privacy technique, and security mechanism described in these pages has been tested against real-world constraints and validated for production feasibility.

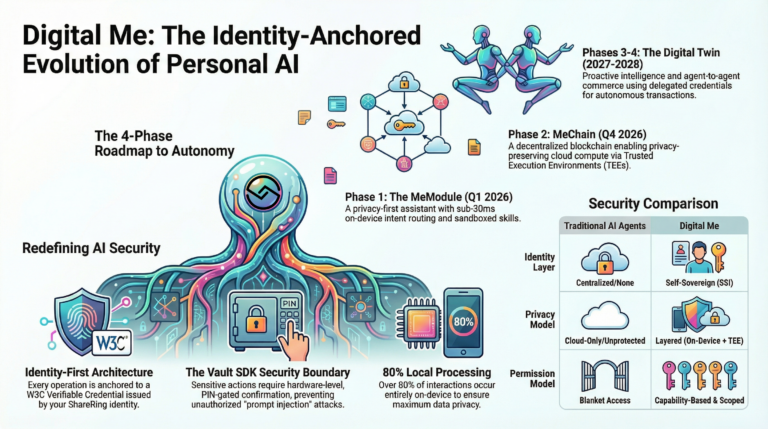

This whitepaper presents the complete technical architecture for Digital Me across four progressive phases:

Phase 1 – Q1 2026

The Digital Me MeModule

An open-source, privacy-first AI assistant with embedding-based intent routing, a curated skills marketplace, and user-chosen LLM backends.

Phase 2 – Q3-Q4 2026

MeChain & Decentralized AI

A purpose-built Cosmos SDK blockchain for privacy-preserving AI inference using TEEs, differential privacy, and zero-knowledge proofs.

Phase 3 – 2027

Adaptive Intelligence

On-device RAG and federated LoRA fine-tuning – a truly proactive agent that anticipates needs and acts on your behalf.

Phase 4 – 2027-2028

The Autonomous Digital Twin

Agent-to-agent commerce, verifiable credential delegation, cross-chain identity, and a self-sustaining digital economy.

The convergence of three technology trends makes this vision achievable for the first time: flagship smartphones now run 3B-parameter models at 60-70 tokens per second, federated LoRA fine-tuning enables per-user personalization with adapters under 10 MB, and W3C Verifiable Credentials v2.0 became a full W3C Recommendation in May 2025. No other project combines self-sovereign identity, privacy-preserving AI, and decentralized compute into a unified consumer product. ShareRing’s existing SSI infrastructure – already holding UK DIATF certification and selected for Australia’s $6.5M age assurance trial – provides a non-replicable foundation that every competitor lacks.

2. The Problem: AI Agents Without Identity

2.1 The AI Agent Explosion

The transition from chatbots to autonomous agents represents the most significant shift in computing since the smartphone. Gartner projects AI agents will command $15 trillion in B2B purchasing decisions by 2028. Anthropic’s Model Context Protocol (MCP), released in November 2024, was followed in 2025 by Google’s A2A (Agent-to-Agent) protocol and the DIF Trusted Agents Working Group, signalling that the infrastructure layer for agent-based computing is being built now. Every major technology company – Apple, Google, Microsoft, Meta, Amazon – has shipped or announced personal AI agent products.

Yet every existing approach shares a fundamental architectural flaw: the AI agent has no verifiable identity. It cannot prove who it represents. It cannot cryptographically demonstrate what it is authorized to do. It cannot provide auditable evidence that a specific model produced a specific output. And critically, it cannot selectively disclose user attributes without revealing the underlying data.

2.2 The Identity Gap in Current AI Agents

Consider the following scenario: you ask your AI agent to book a hotel that requires identity verification. Today’s agents must either (a) send your full passport data to the hotel’s system – a massive privacy violation – or (b) fail to complete the task. There is no mechanism for the agent to present a verifiable credential proving “this person is over 18 and holds a valid Australian passport” without revealing the passport itself. This is the identity gap.
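The mechanism that closes this gap is selective disclosure. As a rough illustration of one well-known technique (salted-hash commitments in the style of IETF SD-JWT, not necessarily the protocol ShareRing uses), the issuer commits to each attribute separately, and the agent later reveals only the attributes a verifier asks for. Everything here is hypothetical: the function names, the claim names, and the omission of the issuer's signature over the digest list, which a real verifier must also check.

```typescript
import { createHash, randomBytes } from "crypto";

// One attribute as committed by the issuer: a random salt, the attribute name, its value.
interface Disclosure {
  salt: string;
  name: string;
  value: string | boolean;
}

// Only these digests are embedded in (and, in a real credential, signed as part of) the credential.
function digestOf(d: Disclosure): string {
  return createHash("sha256").update(JSON.stringify([d.salt, d.name, d.value])).digest("hex");
}

// Issuer: commit to every attribute separately. Signing the digest list is omitted in this sketch.
function issue(claims: Record<string, string | boolean>): { digests: string[]; disclosures: Disclosure[] } {
  const disclosures: Disclosure[] = Object.keys(claims).map((name) => ({
    salt: randomBytes(16).toString("hex"), // salt prevents guessing hidden values from digests
    name,
    value: claims[name],
  }));
  return { digests: disclosures.map(digestOf), disclosures };
}

// Holder (the agent): reveal only the attributes the verifier asked for.
function disclose(all: Disclosure[], names: string[]): Disclosure[] {
  return all.filter((d) => names.indexOf(d.name) !== -1);
}

// Verifier: every revealed attribute must hash to a digest the issuer committed to.
function verifyDisclosure(digests: string[], revealed: Disclosure[]): boolean {
  return revealed.every((d) => digests.indexOf(digestOf(d)) !== -1);
}
```

In this sketch `over18` would be a boolean the issuer attested to at issuance; proving an age threshold directly from a birth date without revealing it requires a zero-knowledge range proof, which is not attempted here.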

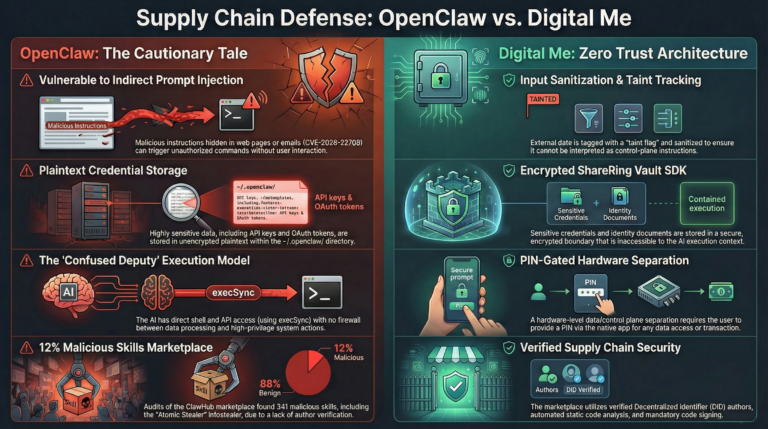

The gap extends beyond identity verification. Without cryptographic delegation, there is no way to scope what an AI agent can do. OpenClaw’s architecture grants the agent blanket access to everything: files, emails, browsing history, credentials, shell commands. The user must trust the AI’s natural language understanding to be the security boundary. This is equivalent to giving every application on your phone root access and hoping they behave.

2.3 The Privacy Paradox

The most useful AI agent is one that knows everything about you. The most secure AI agent is one that knows nothing. Every existing product resolves this tension by choosing usefulness over security: Apple Intelligence processes your data on-device but within Apple’s closed ecosystem with no data portability. Cloud-based assistants like ChatGPT, Claude, and Gemini process your data on third-party servers subject to subpoena, policy changes, and breaches. OpenClaw processes data locally but with no security boundary between data and execution.

Digital Me resolves this paradox through a layered architecture where: (1) the most sensitive operations happen entirely on-device with no network communication, (2) operations requiring more compute use privacy-preserving techniques (TEEs, differential privacy, homomorphic encryption) to process data without exposing it, and (3) the user’s self-sovereign identity provides the trust anchor that binds all operations to a verified human with cryptographically scoped permissions.

2.4 The OpenClaw Cautionary Tale

OpenClaw’s meteoric rise and equally rapid security implosion provide the definitive case study for why a fundamentally different architecture is required. The project – originally named Clawdbot, renamed to Moltbot after a trademark dispute with Anthropic, then again to OpenClaw – demonstrated that users desperately want autonomous AI agents. It also demonstrated that building them without identity, security boundaries, and cryptographic controls is reckless.

2.4.1 Critical Vulnerabilities

Five CVEs were disclosed within weeks of OpenClaw going viral. CVE-2026-25253 (CVSS 8.8) enabled one-click remote code execution via cross-site WebSocket hijacking – simply visiting a malicious webpage was sufficient to gain full gateway access, disable the sandbox, escape Docker containers, and achieve host-level code execution. CVE-2026-22708 documented indirect prompt injection via web browsing, where CSS-hidden instructions on malicious webpages caused the agent to execute arbitrary commands without any user interaction. Two additional command injection CVEs (CVE-2026-25156 and CVE-2026-25157) were disclosed in the same week.

2.4.2 Systemic Architectural Failures

Independent security audits revealed problems far deeper than individual bugs. All credentials – API keys, OAuth tokens, WhatsApp credentials, conversation memories – are stored in plaintext under ~/.openclaw/. The codebase contains 100 calls to eval() and 9 calls to execSync(). Removed credentials are merely backed up rather than securely deleted. The “security” of the sandbox is enforced by the same API that the AI agent can modify – meaning a prompt injection can simply disable the sandbox before executing malicious code.

Palo Alto Networks identified what they termed the “lethal trifecta plus one”: access to private data (emails, files, credentials, browsing history), exposure to untrusted content (web pages, incoming messages, third-party skills), ability to communicate externally (send emails, make API calls, post messages), and persistent memory (SOUL.md and MEMORY.md files that enable time-shifted prompt injection, memory poisoning, and logic-bomb attacks where malicious payloads are fragmented across time and detonated when conditions align).

2.4.3 Supply Chain Catastrophe

The skills marketplace proved equally devastating. Koi Security audited 2,857 skills on ClawHub and found 341 malicious ones (approximately 12%), distributing the Atomic Stealer macOS infostealer in a campaign codenamed “ClawHavoc.” Over 400 malicious skills appeared between January 27 and February 2, 2026. Cisco’s analysis of a single skill – “What Would Elon Do?” – revealed nine security findings including two critical: active data exfiltration via silent curl commands and direct prompt injection to bypass safety guidelines. CrowdStrike documented an indirect prompt injection attack already active in the wild on Moltbook (the AI-to-AI social network) attempting to drain cryptocurrency wallets – the attacker never interacted with OpenClaw directly, instead poisoning the data environment.

Key Insight

These failures are not fixable with patches. They reflect fundamental architectural choices – a privileged service account manipulable via natural language with no firewall between data and control planes – that Digital Me avoids by design.

3. Introducing Digital Me

3.1 Vision

Digital Me is an AI-powered personal agent that knows who you are, acts on your behalf, and never compromises your privacy or security. It is not a chatbot. It is not an assistant confined to a single platform. It is your digital twin – a persistent, identity-anchored AI entity that extends your capabilities into the digital world, backed by the cryptographic guarantees of self-sovereign identity and the transparency of open-source code.

The core design principles that separate Digital Me from every other AI agent:

Identity First

Every operation is anchored to a W3C Verifiable Credential issued by your ShareRing identity. The agent cannot act without proving its authority. Service providers can verify the chain of delegation back to a real, verified human.

Zero Trust by Default

No operation that touches user data executes without explicit, cryptographic user confirmation – enforced at the SDK level, not by the AI. Even if the AI is compromised via prompt injection, the ShareRing Vault SDK requires PIN-gated user approval.

Privacy by Architecture

Over 80% of interactions are processed entirely on-device with no network communication. When cloud compute is required, privacy-preserving techniques ensure data is never exposed in plaintext.

Open and Auditable

The entire codebase is open source. Skills are reviewed for security before publication. Every architectural decision is documented and verifiable. There are no black boxes.

User Sovereignty

Users choose their LLM provider (cloud or self-hosted), control what data the agent can access, and can revoke all agent permissions instantly. Data never leaves user control without explicit consent.

3.2 Architecture Overview

Digital Me’s architecture is organized into four concentric layers, each providing distinct security and privacy guarantees:

| Layer | Function | Privacy Guarantee |
|---|---|---|
| On-Device Core | Embedding router, local skills, conversation memory, on-device LLM | Zero data leaves the device |
| ShareRing Vault SDK | Identity credentials, transaction signing, encrypted storage, selective disclosure | PIN-gated, cryptographic user confirmation for all operations |
| Privacy-Preserving Cloud | Complex inference via TEE-protected compute, differential privacy, homomorphic encryption | Data encrypted in transit and during computation; no plaintext exposure |
| MeChain Network | Decentralized AI coordination, model registry, federated learning, token economics | Blockchain-verified, community-audited, mathematically proven privacy |

3.3 Technology Stack

The Digital Me MeModule is built with a modern, production-grade technology stack designed for offline-first operation within the ShareRing Me app ecosystem:

4. Phase 1: The Digital Me MeModule

Phase 1 delivers a fully functional, privacy-first AI assistant as a MeModule within the ShareRing Me app. This is the foundation upon which all subsequent phases build. The MVP target is a working demonstration within two weeks of the release of this whitepaper, with a full public release following security audit.

4.1 Embedding-Based Intent Routing

The core innovation of Digital Me’s Phase 1 architecture is the separation of intent classification from language generation. Rather than sending every user message to an LLM for interpretation – which introduces latency, cost, and privacy exposure – Digital Me uses an on-device embedding model to classify user intent in under 30 milliseconds, then routes to the appropriate skill handler.

4.1.1 The Embedding Router Pipeline

When a user sends a message, the following pipeline executes entirely on-device:

The user’s message is embedded using an INT8-quantized GTE-small model (~18 MB, 384-dimensional vectors) via ONNX Runtime with XNNPACK acceleration, completing in 10-30ms on modern ARM SoCs.

The resulting embedding vector is compared via cosine similarity against pre-computed centroid embeddings for each registered skill category.

If the highest similarity score exceeds 0.85 (high confidence), the message is routed directly to the matching skill handler, with no LLM involvement.

If the score falls between 0.80 and 0.85 (medium confidence), the user is asked to confirm: “Did you mean to [action]?”

If the score is below 0.80, the message is routed to the general conversation handler (LLM).
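The three confidence bands can be sketched as follows. This is a minimal illustration, assuming the query has already been embedded (the ONNX Runtime inference step is omitted) and that each skill's centroid is precomputed, for example as the mean embedding of its trigger phrases. `route`, `SkillCentroid`, and the toy vectors used to test it are hypothetical.

```typescript
// Outcome of routing one message, following the three confidence bands.
type Route =
  | { kind: "skill"; skill: string; score: number } // > 0.85: run the skill, no LLM
  | { kind: "confirm"; skill: string; score: number } // 0.80 to 0.85: ask "Did you mean to ...?"
  | { kind: "llm"; score: number }; // < 0.80: general conversation handler

interface SkillCentroid {
  skill: string;
  centroid: number[]; // assumed: mean embedding of the skill's trigger phrases
}

const HIGH_CONFIDENCE = 0.85;
const MEDIUM_CONFIDENCE = 0.8;

// Cosine similarity; returns 0 for a zero vector instead of dividing by zero.
function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

function route(query: number[], skills: SkillCentroid[]): Route {
  // Find the closest skill centroid.
  let best = "";
  let bestScore = -Infinity;
  for (const s of skills) {
    const score = cosine(query, s.centroid);
    if (score > bestScore) {
      bestScore = score;
      best = s.skill;
    }
  }
  // Map the best score onto the three bands.
  if (bestScore > HIGH_CONFIDENCE) return { kind: "skill", skill: best, score: bestScore };
  if (bestScore >= MEDIUM_CONFIDENCE) return { kind: "confirm", skill: best, score: bestScore };
  return { kind: "llm", score: bestScore };
}
```

If all embeddings are L2-normalised when they are created, cosine similarity reduces to a plain dot product, which saves a square root per comparison on-device.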

This tiered routing approach achieves 92-96% precision on intent classification benchmarks while reducing average response latency from ~5,000ms (full LLM round-trip) to under 100ms for skill-routable requests. Critically, for the majority of routine interactions – checking balance, viewing documents, sharing credentials – no data needs to leave the device at all.

4.1.2 Adaptive Embedding Strategy

Digital Me implements a tiered embedding strategy to optimize for both speed and accuracy:

| Tier | Model | Size | Latency | Use Case |
|---|---|---|---|---|
| Tier 0 (Pre-filter) | Model2Vec distilled | 8 MB | <0.1ms | Instant coarse filtering of obvious intents |
| Tier 1 (Primary) | INT8 GTE-small | 18 MB | 10-20ms | Standard intent classification for all queries |
| Tier 2 (Precision) | EmbeddingGemma-300M | <200 MB | 15-40ms | Disambiguation of complex or ambiguous queries |

The Tier 0 Model2Vec distillation compresses the full MiniLM model into a static 8 MB model that runs in under 0.1 milliseconds, retaining approximately 90% of classification accuracy. This is used for sub-millisecond pre-filtering of obvious intents before engaging the more expensive Tier 1 model.
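One way to wire the three tiers together is a confidence cascade: run the cheapest encoder first and escalate only when its best match falls below an acceptance threshold. The sketch below assumes that policy, per-tier acceptance thresholds, and separate centroids per tier (vectors from different models are not comparable). The whitepaper does not specify the escalation rule, and `embed` stands in for the actual model call.

```typescript
interface SkillCentroid {
  skill: string;
  centroid: number[];
}

// One embedding tier. Each tier carries its own centroids because vectors
// produced by different models live in different spaces.
interface Tier {
  name: string;
  embed: (text: string) => number[]; // stand-in for the Model2Vec / GTE-small / EmbeddingGemma call
  skills: SkillCentroid[];
  accept: number; // assumed: minimum best score at which this tier's answer is final
}

interface TierResult {
  tier: string;
  skill: string;
  score: number;
}

function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

function bestMatch(v: number[], skills: SkillCentroid[]): { skill: string; score: number } {
  let skill = "";
  let score = -Infinity;
  for (const s of skills) {
    const c = cosine(v, s.centroid);
    if (c > score) {
      score = c;
      skill = s.skill;
    }
  }
  return { skill, score };
}

// Cheapest tier first; escalate only while the current tier is not confident.
function classify(text: string, tiers: Tier[]): TierResult | undefined {
  let last: TierResult | undefined;
  for (const tier of tiers) {
    const m = bestMatch(tier.embed(text), tier.skills);
    last = { tier: tier.name, skill: m.skill, score: m.score };
    if (m.score >= tier.accept) break;
  }
  // Result of the last tier consulted; the router's bands are applied to it afterwards.
  return last;
}
```

Under this policy most obvious requests stop at Tier 0, so the average cost is dominated by the cheapest model, while ambiguous requests still reach the most precise one.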

4.2 The Skills Architecture

Skills are the fundamental unit of capability in Digital Me. Each skill is a self-contained, sandboxed module that performs a specific action on behalf of the user. Skills are inspired by the Agent Skills pattern formalized by Anthropic (October 2025) and adopted as an industry standard under the Linux Foundation’s Agentic AI Foundation – but with critical security enhancements that address the supply chain vulnerabilities exposed by OpenClaw’s ClawHub disaster.

4.2.1 Skill Structure

Each skill is defined by a SKILL.md manifest file containing YAML frontmatter that specifies a unique skill identifier and version, human-readable name and description, required permissions (declared capabilities), trigger patterns (semantic descriptions), input/output schemas, author identity and ShareRing verification status, and a cryptographic hash of the skill code for integrity verification.
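The manifest fields listed above map naturally onto a typed structure. The sketch assumes the YAML frontmatter has already been parsed (the parser is not shown); the field names, the kebab-case identifier rule, and the use of hex-encoded SHA-256 for the code hash are illustrative assumptions rather than the published schema.

```typescript
import { createHash } from "crypto";

// Parsed SKILL.md frontmatter. Field names are illustrative, not a published schema.
interface SkillManifest {
  id: string; // unique skill identifier, e.g. "share-credential"
  version: string; // MAJOR.MINOR.PATCH
  name: string; // human-readable name
  description: string;
  permissions: string[]; // declared bridge methods, e.g. ["getBalance"]
  triggers: string[]; // semantic descriptions used to build router centroids
  inputSchema: object;
  outputSchema: object;
  author: { id: string; verified: boolean }; // author identity and ShareRing verification status
  codeHash: string; // hex-encoded SHA-256 of the skill's code bundle
}

function sha256Hex(data: string | Buffer): string {
  return createHash("sha256").update(data).digest("hex");
}

// Structural checks; an empty result means the manifest passes.
function validateManifest(m: SkillManifest): string[] {
  const errors: string[] = [];
  if (!/^[a-z0-9]+(-[a-z0-9]+)*$/.test(m.id)) errors.push("id must be kebab-case");
  if (!/^\d+\.\d+\.\d+$/.test(m.version)) errors.push("version must be MAJOR.MINOR.PATCH");
  if (!/^[0-9a-f]{64}$/.test(m.codeHash)) errors.push("codeHash must be a hex SHA-256 digest");
  if (!m.author.verified) errors.push("author must have a verified ShareRing identity");
  return errors;
}

// Load-time integrity check: the code must hash to the value recorded in the manifest.
function verifyIntegrity(m: SkillManifest, code: string | Buffer): boolean {
  return sha256Hex(code) === m.codeHash;
}
```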

4.2.2 Capability-Based Permission Model

Digital Me implements a capability-based permission system that eliminates blanket access entirely. Each skill must declare the specific ShareRing bridge methods it requires at registration time. The skill runtime enforces these declarations at execution time – a skill that declares it needs getBalance() cannot call signTransaction(), regardless of what its code attempts to do.

| Tier | Capabilities | User Approval | Examples |
|---|---|---|---|
| Read-Only | getAppInfo, getDeviceInfo, readStorage | None (pre-approved) | Device info, app settings, cached preferences |
| Identity Read | getDocuments, getEmail, getAvatar | One-time approval | View documents, retrieve email address |
| Identity Share | execQuery (selective disclosure) | Per-request PIN | Share credentials with third parties |
| Financial Read | getBalance, getMainAccount | One-time approval | View wallet balance, account info |
| Financial Write | signTransaction, signAndBroadcast | Per-request PIN + confirmation | Send tokens, sign messages |
| Cryptographic | encrypt, decrypt, sign | Per-request PIN | Encrypt/decrypt data, sign messages |
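A minimal sketch of the declaration check follows, with the table transcribed into a lookup. It assumes a hypothetical `scopedBridge` wrapper that is the only handle a skill receives; the approval levels are included only so that an install prompt can display the strongest one. A closure inside the same JavaScript context is not a security boundary by itself, and in Digital Me the PIN-gated approvals are enforced by the native app rather than by code like this.

```typescript
// Approval levels from the capability table, weakest first.
type Approval = "none" | "one-time" | "pin" | "pin+confirm";
const ORDER: Approval[] = ["none", "one-time", "pin", "pin+confirm"];

// Bridge method -> approval level, transcribed from the table.
const APPROVAL: { [method: string]: Approval } = {
  getAppInfo: "none", getDeviceInfo: "none", readStorage: "none",
  getDocuments: "one-time", getEmail: "one-time", getAvatar: "one-time",
  execQuery: "pin",
  getBalance: "one-time", getMainAccount: "one-time",
  signTransaction: "pin+confirm", signAndBroadcast: "pin+confirm",
  encrypt: "pin", decrypt: "pin", sign: "pin",
};

type BridgeFn = (...args: unknown[]) => Promise<unknown>;

// Strongest approval any declared capability requires (e.g. for the install prompt).
function strongestApproval(declared: string[]): Approval {
  let level = 0;
  for (const m of declared) level = Math.max(level, ORDER.indexOf(APPROVAL[m]));
  return ORDER[level];
}

// Builds the only bridge handle a skill receives. Calls outside the declared
// set throw before reaching the real bridge, whatever the skill code attempts.
function scopedBridge(bridge: { [method: string]: BridgeFn }, declared: string[]) {
  const allowed = declared.slice(); // private copy: the skill cannot widen it later
  for (const m of allowed) {
    if (!(m in APPROVAL)) throw new Error(`unknown capability: ${m}`);
  }
  return Object.freeze({
    call(method: string, ...args: unknown[]): Promise<unknown> {
      if (allowed.indexOf(method) === -1) throw new Error(`capability not declared: ${method}`);
      const fn = bridge[method];
      if (!fn) throw new Error(`bridge does not implement ${method}`);
      return fn(...args);
    },
  });
}
```

With this shape, a skill registered with `["getBalance"]` that tries `call("signTransaction")` fails at the wrapper, before any approval prompt is even shown.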

4.2.3 Built-In Skills Library

Digital Me ships with a comprehensive library of built-in skills. Each skill has been security audited and is maintained by ShareRing:

Identity & Verification

whoami

Retrieve and summarize verified identity from the Vault

documents

List, search, and describe Vault documents with expiry tracking

share-credential

Selective disclosure of identity attributes without revealing documents

verify-age

Zero-knowledge age verification via ZkVCT protocol

document-expiry

Proactive alerts for approaching document expirations

identity-backup

Encrypted backup with recovery key management

Financial & Wallet

balance

Check balances across all token types with fiat conversion

send

Prepare, review, and sign transfer transactions with fee estimates

stake

View positions, rewards, and manage validator delegation

swap

Token swaps via DEX aggregators with MEV resistance

spending-tracker

Auto categorization and visualization with budget alerts

payment-request

Generate QR codes or deep links for receiving payments

Communication & Productivity

draft-email

Context-aware email composition with tone adjustment

summarize

Summarize documents, web pages, or threads with adjustable detail

translate

On-device translation with cloud fallback for rare languages

calendar-assist

Schedule management, conflict detection, and meeting prep

Travel & Lifestyle

hotel-check-in

Digital check-in using verifiable credentials and selective disclosure

travel-checklist

Auto-generated checklists with visa status and document requirements

flight-status

Proactive notifications for delays, gate changes, and rebooking

expense-report

Auto expense categorization from transactions and receipt photos

Commerce & Shopping

product-search

Find products across retailers with natural language queries

purchase-assist

Complete purchases with zero manual data entry via selective disclosure

price-tracker

Monitor prices and alert when items drop below thresholds

subscription-manager

Monitor renewals, price changes, and unused services

Security & Privacy

security-audit · privacy-report · credential-revoke · data-export · breach-monitor

DeFi & Web3

gas-optimizer · portfolio-overview · governance-vote · nft-manager · bridge-assist

Health & Wellbeing

health-record · vaccination-proof · insurance-claim

Education & Professional

credential-verify · resume-builder · skill-assessment

4.2.4 Community Skills Marketplace

Beyond the built-in library, Digital Me supports a curated community skills marketplace with a rigorous 6-step security review process designed to prevent supply chain attacks:

Automated Static Analysis

Scanned for vulnerability patterns, suspicious API calls, network requests, and data exfiltration attempts.

Capability Verification

Declared permissions compared against actual code behavior. Skills exceeding declarations are rejected.

Prompt Injection Testing

Automated adversarial testing using Giskard’s red-teaming framework.

Manual Security Audit

ShareRing’s security team performs manual review focusing on data handling and abuse vectors.

Author Identity Verification

Skill authors must have a verified ShareRing identity. Malicious authors can be permanently banned.

Code Signing & Integrity

Published skills are cryptographically signed. Any modification invalidates the signature.
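The signing and verification step can be sketched with Node's built-in `crypto` module. Ed25519 is an assumed choice (the whitepaper does not name a signature algorithm), and how the marketplace's public key reaches the device, for example pinned in the app or anchored to the author's ShareRing identity, is out of scope here.

```typescript
import { generateKeyPairSync, sign, verify, KeyObject } from "crypto";

// Marketplace side: sign the exact bytes of the published bundle.
// For Ed25519 the algorithm argument is null because hashing is built into the scheme.
function signSkill(bundle: Buffer, privateKey: KeyObject): Buffer {
  return sign(null, bundle, privateKey);
}

// Device side: refuse to install or load a bundle whose signature does not verify.
function verifySkill(bundle: Buffer, signature: Buffer, publicKey: KeyObject): boolean {
  return verify(null, bundle, publicKey, signature);
}

// Example: in practice the private key never leaves the marketplace's signing service.
const { publicKey, privateKey } = generateKeyPairSync("ed25519");
const bundle = Buffer.from("export function run() { /* skill code */ }");
const signature = signSkill(bundle, privateKey);
console.log(verifySkill(bundle, signature, publicKey)); // true
```

Because the signature covers the exact bytes of the bundle, any post-publication change, even a single flipped bit, fails verification.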

4.3 Prompt Injection Defense Architecture

Prompt injection is the single greatest threat to AI agent security. Digital Me implements a multi-layered defense-in-depth strategy:

4.3.1 Hardware-Level Data/Control Plane Separation

The most critical architectural decision in Digital Me is the separation of the data plane from the control plane at the SDK level. The ShareRing Vault SDK enforces that any operation that reads user data, shares credentials, signs transactions, or accesses encrypted storage requires explicit, PIN-gated user confirmation. This confirmation flow is implemented in the native ShareRing Me app, outside the MeModule’s WebView sandbox. Even if the AI’s context is fully compromised by a prompt injection attack, the attacker cannot read Vault documents without the user’s PIN, share credentials without the user seeing exactly what will be disclosed, sign transactions without PIN entry, or access encrypted data without PIN-gated decryption.

The Fundamental Difference

Security is not enforced by the AI’s good behavior or by configuration that the AI can modify. It is enforced by hardware-backed cryptographic mechanisms in the native application layer.
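The shape of this guarantee can be illustrated with a small sketch in which the sensitive operation is reachable only through a gate built on the native side. `createVaultGate` and `NativeConfirm` are hypothetical names. In the real app the confirmation sheet and PIN check run in native code outside the WebView, which TypeScript alone cannot model, so the closure here only stands in for that process boundary.

```typescript
// What the user sees on the native confirmation sheet.
interface ConfirmPrompt {
  action: "share";
  recipient: string;
  fields: string[];
}

// Stand-in for the native PIN sheet. In the app it runs outside the WebView,
// so text produced by the model can neither call it nor forge its answer.
type NativeConfirm = (prompt: ConfirmPrompt) => Promise<boolean>;

// Built on the native side. The vault contents and the confirm callback live
// only in this closure; the MeModule receives the returned object and nothing else.
function createVaultGate(vault: { [field: string]: string }, confirm: NativeConfirm) {
  return {
    async share(recipient: string, fields: string[]): Promise<{ [field: string]: string }> {
      // Snapshot first: exactly the fields shown to the user are the ones released,
      // even if the caller mutates its array while the prompt is open.
      const shown = fields.slice();
      const approved = await confirm({ action: "share", recipient, fields: shown });
      if (!approved) throw new Error("user declined");
      const released: { [field: string]: string } = {};
      for (const f of shown) {
        if (Object.prototype.hasOwnProperty.call(vault, f)) released[f] = vault[f];
      }
      return released;
    },
  };
}
```

The snapshot matters: without it, a compromised caller could show the user one field list and then release a longer one once the PIN is entered.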

4.3.2 Input Sanitization and Taint Tracking

All data entering Digital Me from external sources (web pages, emails, messages, third-party APIs) is tagged with a taint flag. Tainted data is processed for information extraction but cannot be used as control-plane instructions. This prevents the “confused deputy” attack where malicious data sources embed instructions that the AI interprets as user commands.
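Taint tracking amounts to carrying a provenance label alongside every string and checking it at the point where text would become an instruction. The sketch below shows label propagation and the control-plane check under simplifying assumptions (two sources only, hypothetical function names). In an LLM-based agent the harder part is that the model mixes tainted and untainted text in one context, so the check has to sit outside the model, for instance by letting only user-originated text drive skill selection.

```typescript
// Provenance label carried with every piece of text.
type Source = "user" | "external";

interface Tagged {
  readonly text: string;
  readonly source: Source;
}

const fromUser = (text: string): Tagged => ({ text, source: "user" });
const fromExternal = (text: string): Tagged => ({ text, source: "external" });

// Taint propagates: anything derived from external text stays external.
function concat(...parts: Tagged[]): Tagged {
  return {
    text: parts.map((p) => p.text).join(" "),
    source: parts.some((p) => p.source === "external") ? "external" : "user",
  };
}

// Data-plane use is allowed: tainted text can be read, excerpted, summarised.
function extract(doc: Tagged, maxChars: number): Tagged {
  return { text: doc.text.slice(0, maxChars), source: doc.source };
}

// Control-plane use is not: only untainted text may choose which skill runs.
function selectSkill(instruction: Tagged, route: (text: string) => string): string {
  if (instruction.source !== "user") {
    throw new Error("tainted text cannot be used as an instruction");
  }
  return route(instruction.text);
}
```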

4.3.3 Skill Isolation and Least Privilege

Each skill executes in a sandboxed JavaScript context with access only to its declared capabilities. Skills cannot access other skills’ state, cannot modify the agent’s core memory, and cannot make network requests unless explicitly declared and approved.

4.3.4 Anomaly Detection and Rate Limiting

Digital Me monitors for anomalous patterns that may indicate prompt injection attempts: rapid sequential skill invocations, requests for capabilities the conversation context doesn’t justify, attempts to access credentials outside the user’s normal patterns, and natural language patterns known to be associated with injection attacks.
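The rate-limiting half of this mechanism can be as simple as a sliding window over recent skill invocations. The sketch below is an in-memory illustration; `InvocationLimiter` is a hypothetical name, any limits passed to it are placeholders rather than Digital Me's actual values, and the pattern-based detection described above is not shown.

```typescript
// Sliding-window limiter: at most `maxCalls` skill invocations per `windowMs`.
class InvocationLimiter {
  private readonly times: number[] = [];

  constructor(private readonly maxCalls: number, private readonly windowMs: number) {}

  // Records the call and returns true if it fits the budget; returns false otherwise.
  // `now` is injectable so behaviour can be tested without real time passing.
  tryAcquire(now: number = Date.now()): boolean {
    // Drop timestamps that have slid out of the window.
    while (this.times.length > 0 && now - this.times[0] >= this.windowMs) {
      this.times.shift();
    }
    if (this.times.length >= this.maxCalls) return false;
    this.times.push(now);
    return true;
  }
}
```

One reasonable design choice is to treat a denied call as an anomaly signal, surfacing it to the user and logging it, rather than silently retrying it.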

4.4 LLM Backend Flexibility

Digital Me does not lock users into a single AI provider:

| Option | Privacy | Performance | Cost | Best For |
|---|---|---|---|---|
| On-Device (Gemma 3n E2B) | Maximum – zero data leaves device | 2B params, ~50 tok/s | Free | Privacy-maximizers, offline use |
| On-Device (Gemma 3n E4B) | Maximum – zero data leaves device | 4B params, ~30 tok/s | Free | Flagship device users |
| Self-Hosted (Ollama/vLLM) | Very High – user controls server | Any model size | Infrastructure cost | Power users, enterprise |
| Cloud LLM (Claude/GPT-4) | Moderate – data sent to provider | State-of-the-art quality | Pay-per-use | Maximum intelligence |
| MeChain Network (Phase 2) | Very High – TEE-protected | Distributed compute | MeToken payment | Cloud quality + privacy |

A critical design principle: the MeModule itself ships lightweight at approximately 18 MB. On-device LLM support is an optional download (1-2 GB), similar to downloading an offline language pack. For routine skill-based operations, the embedding router handles everything in under 30 milliseconds with no LLM involvement at all.

4.5 Memory System

Digital Me maintains a persistent memory system using the ShareRing Me app’s AsyncStorage, enabling conversation continuity across sessions. The memory architecture is designed with privacy as the primary constraint: conversation history (last N messages, configurable, default 50) is stored locally with no server transmission; user preferences are stored as structured key-value pairs; identity cache stores non-sensitive summaries for quick access; and skill state is isolated per-skill and cleared on uninstall. All stored data is encrypted using the ShareRing Vault’s encryption capabilities.
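A sketch of two of these storage constraints, bounded history and encryption at rest, follows. The default of 50 messages comes from the text; AES-256-GCM via Node's `crypto` module is an assumed stand-in for the Vault's encryption, whose API is not described here, and the key is passed in as a parameter because in practice it would be held by or derived from the Vault. `appendBounded`, `seal`, and `unseal` are hypothetical names.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "crypto";

interface Message {
  role: "user" | "assistant";
  text: string;
  at: number; // timestamp
}

// Keep only the most recent `limit` messages (default 50).
function appendBounded(history: Message[], msg: Message, limit = 50): Message[] {
  const next = history.concat([msg]);
  return next.length > limit ? next.slice(next.length - limit) : next;
}

// Encrypt the serialized history before it is written to storage.
// Layout: 12-byte IV | 16-byte GCM auth tag | ciphertext, base64-encoded.
function seal(history: Message[], key: Buffer): string {
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const body = Buffer.concat([cipher.update(JSON.stringify(history), "utf8"), cipher.final()]);
  return Buffer.concat([iv, cipher.getAuthTag(), body]).toString("base64");
}

// Decrypt and authenticate; throws if the stored blob was modified.
function unseal(sealed: string, key: Buffer): Message[] {
  const raw = Buffer.from(sealed, "base64");
  const decipher = createDecipheriv("aes-256-gcm", key, raw.subarray(0, 12));
  decipher.setAuthTag(raw.subarray(12, 28));
  const plain = Buffer.concat([decipher.update(raw.subarray(28)), decipher.final()]);
  return JSON.parse(plain.toString("utf8")) as Message[];
}
```

GCM is chosen here because it authenticates as well as encrypts, so tampering with stored memory (one of the memory-poisoning vectors described for OpenClaw) is detected on read rather than silently loaded.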

4.6 Open Source Commitment

The entire Digital Me MeModule codebase will be published on ShareRing’s GitHub repository under an open-source license upon public release. This includes the complete MeModule application code, the bridge layer implementation, the embedding router with pre-trained models, the agent core with skill orchestration logic, the complete built-in skills library, the marketplace review tooling, and comprehensive documentation with security audit reports.

5. Phase 2: MeChain – Decentralized Privacy-Preserving AI

While Phase 1 gives users a fully functional, privacy-first AI assistant, it leaves a fundamental tension unresolved: on-device models are limited in capability, and cloud models require trusting a centralized provider with your data. Phase 2 resolves this tension by introducing MeChain – a purpose-built Cosmos SDK blockchain that coordinates decentralized, privacy-preserving AI inference.

5.1 MeChain Architecture

MeChain is a Cosmos SDK appchain built on CometBFT consensus. It inherits single-slot finality, ABCI++ VoteExtensions for validator-attested verification of off-chain compute, CosmWasm smart contracts, and native IBC connectivity to 115+ chains representing over $58B in combined market capitalization, with zero exploits of the IBC protocol since April 2021.

5.1.1 Consensus and Validation

MeChain extends CometBFT consensus with AI-specific validation mechanics. Validators are not merely block producers – they are compute providers who attest to the correctness of AI inference results. Target specifications: 5,000 TPS sustained throughput, sub-500ms block times, BlockSTM parallel transaction execution, and sub-second finality for inference requests.

5.1.2 AI-Specific Validation Mechanics

The validation layer moves the security boundary away from the AI’s natural language understanding and into cryptographic and hardware-enforced controls. The core mechanisms include Zero Trust by Default with cryptographic confirmation at the Vault SDK level, Hardware-Level Data/Control Plane Separation, Input Sanitization and Taint Tracking, Skill Isolation and Least Privilege with capability-based permission enforcement, and continuous Anomaly Detection and Rate Limiting.

5.1.3 Privacy-Preserving Inference

Trusted Execution Environments (TEEs) – Primary Method. TEE-based inference is the production-ready approach, offering near-native performance with hardware-enforced isolation. NVIDIA’s Blackwell B200 GPUs deliver TEE-I/O capability with inline NVLink encryption at throughput nearly identical to unencrypted operation. On MeChain, compute providers run inference within TEE enclaves. The user’s query is encrypted end-to-end; the compute provider’s operating system never sees the plaintext data.

Zero-Knowledge Machine Learning (zkML) – For Verifiable Inference. zkML enables proving that a specific model produced a specific output without revealing the inputs. zkLLM (CCS 2024) proved LLaMA-2-13B inference in approximately 15 minutes with a proof size under 200 KB. Lagrange Labs’ DeepProve-1 achieved the first production-ready proof of full GPT-2 inference, running 158x faster than previous approaches.

5.2 Decentralized Compute Marketplace

MeChain operates a decentralized compute marketplace. Compute providers register their hardware capabilities and stake SHR as collateral. A decentralized model registry provides on-chain attestation of model weights and their provenance. Digital Me clients select providers based on latency, cost, TEE certification, and stake amount, while validators periodically challenge providers to verify inference quality. IBC interoperability connects the marketplace to Akash Network, Nillion, and ShareLedger.
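Provider selection could be implemented as a filter on hard requirements followed by a weighted score. In the sketch below, treating TEE attestation and a minimum stake as hard filters, normalising each criterion against the strongest current candidate, and all field and weight names are assumptions; the whitepaper names the criteria but not how they are combined.

```typescript
// A compute provider as a client might see it. Field names are illustrative.
interface Provider {
  id: string;
  latencyMs: number; // recently observed round-trip latency
  price: number; // quoted price per request, in whatever unit the marketplace uses
  teeAttested: boolean;
  stakeShr: number; // SHR staked as collateral
}

// Relative importance of each criterion; a client-side preference.
interface Weights {
  latency: number;
  cost: number;
  stake: number;
}

// x / max, guarded so an all-zero column does not divide by zero.
const ratio = (x: number, max: number): number => (max > 0 ? x / max : 0);

function selectProvider(providers: Provider[], w: Weights, minStake: number): Provider | undefined {
  // Hard requirements first: TEE attestation and minimum collateral.
  const eligible = providers.filter((p) => p.teeAttested && p.stakeShr >= minStake);
  if (eligible.length === 0) return undefined;

  const maxLatency = Math.max(...eligible.map((p) => p.latencyMs));
  const maxPrice = Math.max(...eligible.map((p) => p.price));
  const maxStake = Math.max(...eligible.map((p) => p.stakeShr));

  // Each normalised criterion lies in [0, 1]: lower latency and price score
  // higher, larger stake scores higher.
  const score = (p: Provider): number =>
    w.latency * (1 - ratio(p.latencyMs, maxLatency)) +
    w.cost * (1 - ratio(p.price, maxPrice)) +
    w.stake * ratio(p.stakeShr, maxStake);

  return eligible.reduce((best, p) => (score(p) > score(best) ? p : best));
}
```

Normalising against the current candidate set keeps the weights unit-free, at the cost of scores that are only meaningful relative to the providers available at that moment.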

4.2 The Skills Architecture

Skills are the fundamental unit of capability in Digital Me. Each skill is a self-contained, sandboxed module that performs a specific action on behalf of the user. Skills are inspired by the Agent Skills pattern formalized by Anthropic (October 2025) and adopted as an industry standard under the Linux Foundation’s Agentic AI Foundation – but with critical security enhancements that address the supply chain vulnerabilities exposed by OpenClaw’s ClawHub disaster.

4.2.1 Skill Structure

Each skill is defined by a SKILL.md manifest file containing YAML frontmatter that specifies a unique skill identifier and version, human-readable name and description, required permissions (declared capabilities), trigger patterns (semantic descriptions), input/output schemas, author identity and ShareRing verification status, and a cryptographic hash of the skill code for integrity verification.

4.2.2 Capability-Based Permission Model

Digital Me implements a capability-based permission system that eliminates blanket access entirely. Each skill must declare the specific ShareRing bridge methods it requires at registration time. The skill runtime enforces these declarations at execution time – a skill that declares it needs getBalance() cannot call signTransaction(), regardless of what its code attempts to do.

| Tier | Capabilities | User Approval | Examples |

|---|---|---|---|

| Read-Only | getAppInfo, getDeviceInfo, readStorage | None (pre-approved) | Device info, app settings, cached preferences |

| Identity Read | getDocuments, getEmail, getAvatar | One-time approval | View documents, retrieve email address |

| Identity Share | execQuery (selective disclosure) | Per-request PIN | Share credentials with third parties |

| Financial Read | getBalance, getMainAccount | One-time approval | View wallet balance, account info |

| Financial Write | signTransaction, signAndBroadcast | Per-request PIN + confirmation | Send tokens, sign messages |

| Cryptographic | encrypt, decrypt, sign | Per-request PIN | Encrypt/decrypt data, sign messages |

4.2.3 Built-In Skills Library

Digital Me ships with a comprehensive library of built-in skills. Each skill has been security audited and is maintained by ShareRing:

Identity & Verification

| Skill | Description |
|---|---|
| whoami | Retrieve and summarize verified identity from the Vault |
| documents | List, search, and describe Vault documents with expiry tracking |
| share-credential | Selective disclosure of identity attributes without revealing documents |
| verify-age | Zero-knowledge age verification via the ZkVCT protocol |
| document-expiry | Proactive alerts for approaching document expirations |
| identity-backup | Encrypted backup with recovery key management |

Financial & Wallet

| Skill | Description |
|---|---|
| balance | Check balances across all token types with fiat conversion |
| send | Prepare, review, and sign transfer transactions with fee estimates |
| stake | View positions and rewards, and manage validator delegation |
| swap | Token swaps via DEX aggregators with MEV resistance |
| spending-tracker | Automatic spending categorization and visualization with budget alerts |
| payment-request | Generate QR codes or deep links for receiving payments |

Communication & Productivity

| Skill | Description |
|---|---|
| draft-email | Context-aware email composition with tone adjustment |
| summarize | Summarize documents, web pages, or threads with adjustable detail |
| translate | On-device translation with cloud fallback for rare languages |
| calendar-assist | Schedule management, conflict detection, and meeting prep |

Travel & Lifestyle

| Skill | Description |
|---|---|
| hotel-check-in | Digital check-in using verifiable credentials and selective disclosure |
| travel-checklist | Auto-generated checklists with visa status and document requirements |
| flight-status | Proactive notifications for delays, gate changes, and rebooking |
| expense-report | Automatic expense categorization from transactions and receipt photos |

Commerce & Shopping

| Skill | Description |
|---|---|
| product-search | Find products across retailers with natural language queries |
| purchase-assist | Complete purchases with zero manual data entry via selective disclosure |
| price-tracker | Monitor prices and alert when items drop below thresholds |
| subscription-manager | Monitor renewals, price changes, and unused services |

Security & Privacy

security-audit · privacy-report · credential-revoke · data-export · breach-monitor

DeFi & Web3

gas-optimizer · portfolio-overview · governance-vote · nft-manager · bridge-assist

Health & Wellbeing

health-record · vaccination-proof · insurance-claim

Education & Professional

credential-verify · resume-builder · skill-assessment

4.2.4 Community Skills Marketplace

Beyond the built-in library, Digital Me supports a curated community skills marketplace with a rigorous 6-step security review process designed to prevent supply chain attacks:

| Step | Check | Description |
|---|---|---|
| 1 | Automated Static Analysis | Code is scanned for vulnerability patterns, suspicious API calls, network requests, and data exfiltration attempts. |
| 2 | Capability Verification | Declared permissions are compared against actual code behavior; skills exceeding their declarations are rejected. |
| 3 | Prompt Injection Testing | Automated adversarial testing using Giskard's red-teaming framework. |
| 4 | Manual Security Audit | ShareRing's security team performs a manual review focusing on data handling and abuse vectors. |
| 5 | Author Identity Verification | Skill authors must have a verified ShareRing identity; malicious authors can be permanently banned. |
| 6 | Code Signing & Integrity | Published skills are cryptographically signed; any modification invalidates the signature. |
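Step 6 can be illustrated with Ed25519 signatures from Node's built-in `crypto` module. This sketch assumes the skill bundle is signed as raw bytes and that the marketplace can look up the author's public key; the bundle format, the `SignedSkill` type, and how keys are bound to the author's DID are not specified in this paper and are hypothetical here.

```typescript
import { generateKeyPairSync, sign, verify, KeyObject } from "node:crypto";

// A published skill: the code bundle plus a detached signature over its exact bytes.
interface SignedSkill {
  bundle: Buffer;
  signature: Buffer;
}

function signSkill(bundle: Buffer, authorPrivateKey: KeyObject): SignedSkill {
  // Ed25519 has no separate digest parameter, so the algorithm argument is null.
  return { bundle, signature: sign(null, bundle, authorPrivateKey) };
}

function isIntact(skill: SignedSkill, authorPublicKey: KeyObject): boolean {
  // Any change to the bundle bytes (or to the signature) makes verification fail.
  return verify(null, skill.bundle, authorPublicKey, skill.signature);
}

// Example author key pair; in practice the author generates and holds the private key.
const { publicKey: authorPub, privateKey: authorPriv } = generateKeyPairSync("ed25519");
```

Because the signature covers the exact published bytes, the client can re-check integrity at install time and at every load, not only during review.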

4.3 Prompt Injection Defense Architecture

Prompt injection is the single greatest threat to AI agent security. Digital Me implements a multi-layered defense-in-depth strategy:

4.3.1 Hardware-Level Data/Control Plane Separation

The most critical architectural decision in Digital Me is the separation of the data plane from the control plane at the SDK level. The ShareRing Vault SDK enforces that any operation that reads user data, shares credentials, signs transactions, or accesses encrypted storage requires explicit, PIN-gated user confirmation. This confirmation flow is implemented in the native ShareRing Me app, outside the MeModule’s WebView sandbox. Even if the AI’s context is fully compromised by a prompt injection attack, the attacker cannot read Vault documents without the user’s PIN, share credentials without the user seeing exactly what will be disclosed, sign transactions without PIN entry, or access encrypted data without PIN-gated decryption.

The Fundamental Difference

Security is not enforced by the AI’s good behavior or by configuration that the AI can modify. It is enforced by hardware-backed cryptographic mechanisms in the native application layer.
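The data/control plane separation in 4.3.1 can be sketched as a gate on the native side of the bridge: the WebView can only submit a request, and the native layer executes it only after the user approves a PIN prompt that it renders itself. The `NativeGate` class, the `PinPrompt` callback, and the operation names are hypothetical illustrations of the principle, not the Vault SDK's API.

```typescript
// Operations that touch user data; each must pass a PIN prompt rendered by the native app.
type SensitiveOp = "readDocument" | "shareCredential" | "signTransaction" | "decrypt";

interface OpRequest {
  op: SensitiveOp;
  // Human-readable summary shown natively, e.g. exactly which attributes will be disclosed.
  summary: string;
}

// Native-side prompt: shows the summary; resolves true only if the user entered a valid PIN.
type PinPrompt = (summary: string) => Promise<boolean>;

class NativeGate {
  private readonly promptForPin: PinPrompt;
  private readonly execute: (req: OpRequest) => Promise<string>;

  constructor(promptForPin: PinPrompt, execute: (req: OpRequest) => Promise<string>) {
    this.promptForPin = promptForPin;
    this.execute = execute;
  }

  // The only entry point reachable from the WebView. There is deliberately no
  // auto-approve flag or alternate path: every request goes through the prompt.
  async submit(req: OpRequest): Promise<string> {
    const approved = await this.promptForPin(req.summary);
    if (!approved) {
      throw new Error(`User declined ${req.op}`);
    }
    return this.execute(req);
  }
}
```

A compromised AI context can still call `submit`, but the outcome depends on what the user sees and approves in the native prompt, which the WebView cannot render or dismiss.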

4.3.2 Input Sanitization and Taint Tracking

All data entering Digital Me from external sources (web pages, emails, messages, third-party APIs) is tagged with a taint flag. Tainted data is processed for information extraction but cannot be used as control-plane instructions. This prevents the “confused deputy” attack where malicious data sources embed instructions that the AI interprets as user commands.
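One way to realize taint tracking is to wrap external data in a distinct type that extraction code can read but the skill dispatcher refuses to treat as an instruction. The `Tainted` and `UserCommand` classes and the `extractDates`/`dispatchCommand` functions are hypothetical; a production system would also need to propagate taint through model outputs derived from tainted inputs, which this sketch does not attempt.

```typescript
// Wrapper for data that arrived from an external source (web page, email, API).
class Tainted {
  readonly source: string;
  readonly text: string;
  constructor(source: string, text: string) {
    this.source = source;
    this.text = text;
  }
}

// Trusted input: typed directly by the user in the chat UI.
class UserCommand {
  readonly text: string;
  constructor(text: string) {
    this.text = text;
  }
}

// Data-plane use is allowed: pull ISO dates out of tainted text as plain facts.
function extractDates(input: Tainted): string[] {
  return input.text.match(/\d{4}-\d{2}-\d{2}/g) ?? [];
}

// Control-plane use is not: only UserCommand values may select a skill.
// The runtime instanceof check matters because TypeScript types are structural.
function dispatchCommand(input: UserCommand | Tainted): string {
  if (input instanceof Tainted) {
    throw new Error(`Refusing to treat data from ${input.source} as an instruction`);
  }
  return input.text.trim().split(/\s+/)[0];
}
```

The injected sentence inside a web page is still readable as content, so summarization and extraction keep working; it simply has no path into skill selection.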

4.3.3 Skill Isolation and Least Privilege

Each skill executes in a sandboxed JavaScript context with access only to its declared capabilities. Skills cannot access other skills’ state, cannot modify the agent’s core memory, and cannot make network requests unless explicitly declared and approved.
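Per-skill isolation can be sketched as a host that hands each skill its own state store and a request function limited to declared, approved origins. `SkillHost`, `SkillContext`, `Transport`, and the origin allowlist are assumptions for illustration; the transport is injected so the sketch needs no network, and real isolation would additionally run each skill in a separate JavaScript realm or worker so skills cannot reach each other's objects at all.

```typescript
// Network transport supplied by the host (injected; the real bridge is not modeled here).
type Transport = (url: string) => Promise<string>;

// Everything a skill receives: its own state and a guarded request function.
interface SkillContext {
  state: Map<string, string>;
  request: (url: string) => Promise<string>;
}

class SkillHost {
  private readonly states = new Map<string, Map<string, string>>();
  private readonly transport: Transport;

  constructor(transport: Transport) {
    this.transport = transport;
  }

  contextFor(skillId: string, allowedOrigins: readonly string[]): SkillContext {
    // Each skill gets a private Map; no other skill's state is reachable from this context.
    let state = this.states.get(skillId);
    if (state === undefined) {
      state = new Map<string, string>();
      this.states.set(skillId, state);
    }
    const request = async (url: string): Promise<string> => {
      const origin = new URL(url).origin;
      if (!allowedOrigins.includes(origin)) {
        throw new Error(`${skillId} has no network permission for ${origin}`);
      }
      return this.transport(url);
    };
    return { state, request };
  }

  // Skill state is discarded on uninstall.
  uninstall(skillId: string): void {
    this.states.delete(skillId);
  }
}
```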

4.3.4 Anomaly Detection and Rate Limiting

Digital Me monitors for anomalous patterns that may indicate prompt injection attempts: rapid sequential skill invocations, requests for capabilities the conversation context doesn’t justify, attempts to access credentials outside the user’s normal patterns, and natural language patterns known to be associated with injection attacks.
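The rate-limiting half of this layer can be sketched as a sliding-window counter over skill invocations. The class name, window length, and threshold are illustrative assumptions rather than values from this paper, and real anomaly detection would combine this signal with the context-based checks listed above.

```typescript
// Blocks bursts of skill invocations: at most `maxCalls` within any `windowMs` window.
class InvocationRateLimiter {
  private readonly timestamps: number[] = [];
  private readonly maxCalls: number;
  private readonly windowMs: number;

  constructor(maxCalls: number, windowMs: number) {
    this.maxCalls = maxCalls;
    this.windowMs = windowMs;
  }

  // Returns true if the call is allowed, false if it would exceed the limit.
  tryInvoke(nowMs: number): boolean {
    // Drop timestamps that have slid out of the window.
    while (this.timestamps.length > 0 && nowMs - this.timestamps[0] >= this.windowMs) {
      this.timestamps.shift();
    }
    if (this.timestamps.length >= this.maxCalls) {
      return false; // rejected calls are not recorded
    }
    this.timestamps.push(nowMs);
    return true;
  }
}
```

With a limit of three calls per second, a fourth invocation 300 ms after the first is refused, while a call at 1,000 ms succeeds because the oldest entry has expired.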

4.4 LLM Backend Flexibility

Digital Me does not lock users into a single AI provider:

| Option | Privacy | Performance | Cost | Best For |
|---|---|---|---|---|
| On-Device (Gemma 3n E2B) | Maximum – zero data leaves device | ~2B effective params, ~50 tok/s | Free | Privacy-maximizers, offline use |
| On-Device (Gemma 3n E4B) | Maximum – zero data leaves device | ~4B effective params, ~30 tok/s | Free | Flagship device users |
| Self-Hosted (Ollama/vLLM) | Very High – user controls server | Any model size | Infrastructure cost | Power users, enterprise |
| Cloud LLM (Claude/GPT-4) | Moderate – data sent to provider | State-of-the-art quality | Pay-per-use | Maximum intelligence |
| MeChain Network (Phase 2) | Very High – TEE-protected | Distributed compute | MeToken payment | Cloud quality + privacy |

A critical design principle: the MeModule itself is lightweight, at approximately 18 MB. On-device LLM support is an optional 1-2 GB download, similar to an offline language pack. For routine skill-based operations, the embedding router classifies and routes the request in under 30 milliseconds with no LLM involvement at all.
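The router's decision can be sketched as nearest-prototype classification by cosine similarity, falling back to the configured LLM backend when no skill is a confident match. The three-dimensional vectors, the 0.8 threshold, and the `routeIntent` function are toy assumptions chosen for readability; the actual router model, embedding size, and threshold are not specified here.

```typescript
type Route = { kind: "skill"; skill: string; score: number } | { kind: "llm" };

interface SkillPrototype {
  skill: string;
  vec: readonly number[]; // representative embedding for the skill's intents
}

function cosine(a: readonly number[], b: readonly number[]): number {
  let dot = 0;
  let na = 0;
  let nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  if (na === 0 || nb === 0) return 0; // avoid NaN for zero vectors
  return dot / (Math.sqrt(na) * Math.sqrt(nb));
}

// Route to the closest skill prototype, or to the LLM if none clears the threshold.
function routeIntent(query: readonly number[], prototypes: readonly SkillPrototype[], threshold: number): Route {
  let best: { skill: string; score: number } | null = null;
  for (let i = 0; i < prototypes.length; i++) {
    const score = cosine(query, prototypes[i].vec);
    if (best === null || score > best.score) {
      best = { skill: prototypes[i].skill, score };
    }
  }
  if (best !== null && best.score >= threshold) {
    return { kind: "skill", skill: best.skill, score: best.score };
  }
  return { kind: "llm" };
}
```

The threshold trades coverage for safety: raising it sends more ambiguous requests to the LLM instead of risking a wrong skill invocation.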

4.5 Memory System

Digital Me maintains a persistent memory system using the ShareRing Me app’s AsyncStorage, enabling conversation continuity across sessions. The memory architecture is designed with privacy as the primary constraint: conversation history (last N messages, configurable, default 50) is stored locally with no server transmission; user preferences are stored as structured key-value pairs; identity cache stores non-sensitive summaries for quick access; and skill state is isolated per-skill and cleared on uninstall. All stored data is encrypted using the ShareRing Vault’s encryption capabilities.
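The history and per-skill parts of the memory system can be sketched as a bounded message list persisted through a key-value store, with skill state kept under a per-skill key prefix so it can be removed on uninstall. The `KeyValueStore` interface is a synchronous stand-in for AsyncStorage accessed through the bridge, the key names are hypothetical, and Vault encryption is omitted; all three are assumptions of this sketch.

```typescript
// Synchronous stand-in for a persistent string store such as AsyncStorage.
interface KeyValueStore {
  get(key: string): string | undefined;
  set(key: string, value: string): void;
  delete(key: string): void;
  keys(): string[];
}

class MemoryStore implements KeyValueStore {
  private readonly data = new Map<string, string>();
  get(key: string) { return this.data.get(key); }
  set(key: string, value: string) { this.data.set(key, value); }
  delete(key: string) { this.data.delete(key); }
  keys() { return Array.from(this.data.keys()); }
}

class AgentMemory {
  private readonly store: KeyValueStore;
  private readonly maxMessages: number;

  constructor(store: KeyValueStore, maxMessages = 50) {
    this.store = store;
    this.maxMessages = maxMessages;
  }

  // Append a message, keeping only the most recent `maxMessages`.
  addMessage(message: string): void {
    const history: string[] = JSON.parse(this.store.get("history") ?? "[]");
    history.push(message);
    this.store.set("history", JSON.stringify(history.slice(-this.maxMessages)));
  }

  history(): string[] {
    return JSON.parse(this.store.get("history") ?? "[]");
  }

  setSkillState(skillId: string, key: string, value: string): void {
    this.store.set(`skill:${skillId}:${key}`, value);
  }

  // Delete every key belonging to an uninstalled skill.
  clearSkill(skillId: string): void {
    const prefix = `skill:${skillId}:`;
    for (const key of this.store.keys()) {
      if (key.startsWith(prefix)) this.store.delete(key);
    }
  }
}
```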

4.6 Open Source Commitment

The entire Digital Me MeModule codebase will be published on ShareRing’s GitHub repository under an open-source license upon public release. This includes the complete MeModule application code, the bridge layer implementation, the embedding router with pre-trained models, the agent core with skill orchestration logic, the complete built-in skills library, the marketplace review tooling, and comprehensive documentation with security audit reports.

5. Phase 2: MeChain – Decentralized Privacy-Preserving AI

While Phase 1 gives users a fully functional, privacy-first AI assistant, it leaves a fundamental tension unresolved: on-device models are limited in capability, and cloud models require trusting a centralized provider with your data. Phase 2 resolves this tension by introducing MeChain – a purpose-built Cosmos SDK blockchain that coordinates decentralized, privacy-preserving AI inference.

5.1 MeChain Architecture

MeChain is a Cosmos SDK appchain built on CometBFT consensus. It inherits single-slot finality, ABCI++ VoteExtensions for validator-attested verification of off-chain compute, CosmWasm smart contracts, and native IBC connectivity to 115+ chains with a combined market cap of $58B+ and no IBC protocol exploits since April 2021.

5.1.1 Consensus and Validation

MeChain extends CometBFT consensus with AI-specific validation mechanics. Validators are not merely block producers; they are compute providers who attest to the correctness of AI inference results. Target specifications are 5,000 TPS sustained throughput, sub-500ms block times, and sub-second finality for inference requests, with BlockSTM parallel transaction execution.
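The paper does not say how attestations are tallied. One natural choice, mirroring CometBFT's own commit rule, is to accept an inference result only when validators holding more than two-thirds of total voting power attest to the same output hash. The sketch below assumes that rule; the `Attestation` shape and `quorumOutput` function are hypothetical, and in an ABCI++ design the attestations would travel as vote extensions (`ExtendVote`/`VerifyVoteExtension`) and be aggregated by the next proposer in `PrepareProposal`.

```typescript
// One validator's attestation to the hash of an inference output.
interface Attestation {
  validator: string;
  votingPower: bigint;
  outputHash: string;
}

// Return the output hash backed by > 2/3 of total voting power, or null if none reaches quorum.
function quorumOutput(attestations: readonly Attestation[], totalPower: bigint): string | null {
  const powerByHash = new Map<string, bigint>();
  const seen = new Set<string>();
  for (const a of attestations) {
    if (seen.has(a.validator)) continue; // count each validator at most once
    seen.add(a.validator);
    powerByHash.set(a.outputHash, (powerByHash.get(a.outputHash) ?? 0n) + a.votingPower);
  }
  for (const [hash, power] of powerByHash) {
    // Strictly more than two-thirds, in integer arithmetic: 3 * power > 2 * total.
    if (3n * power > 2n * totalPower) return hash;
  }
  return null;
}
```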

5.1.2 AI-Specific Validation Mechanics

The validation layer moves the security boundary away from the AI’s natural language understanding and into cryptographic and hardware-enforced controls. The core mechanisms include Zero Trust by Default with cryptographic confirmation at the Vault SDK level, Hardware-Level Data/Control Plane Separation, Input Sanitization and Taint Tracking, Skill Isolation and Least Privilege with capability-based permission enforcement, and continuous Anomaly Detection and Rate Limiting.

5.1.3 Privacy-Preserving Inference

Trusted Execution Environments (TEEs) – Primary Method. TEE-based inference is the production-ready approach, offering near-native performance with hardware-enforced isolation. NVIDIA's Blackwell B200 GPUs deliver TEE-I/O capability with inline NVLink encryption, at throughput nearly identical to unencrypted operation. On MeChain, compute providers run inference within TEE enclaves. The user's query is encrypted end-to-end; the compute provider's operating system never sees the plaintext data.
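End-to-end encryption to an enclave can be sketched as ephemeral X25519 key agreement, HKDF key derivation, and AES-256-GCM, using only Node's built-in `crypto` module. The sketch assumes the client has already verified the enclave's remote-attestation report and taken the enclave public key from it; attestation verification is omitted, and the `SealedQuery` type and HKDF `info` label are arbitrary illustrative choices, not part of any MeChain protocol.

```typescript
import {
  createCipheriv, createDecipheriv, diffieHellman, generateKeyPairSync,
  hkdfSync, randomBytes, KeyObject,
} from "node:crypto";

interface SealedQuery {
  ephemeralPublicKey: KeyObject;
  iv: Buffer;
  ciphertext: Buffer;
  tag: Buffer;
}

// Derive a 32-byte AES key from an X25519 shared secret via HKDF-SHA256.
function deriveKey(privateKey: KeyObject, publicKey: KeyObject): Buffer {
  const shared = diffieHellman({ privateKey, publicKey });
  return Buffer.from(hkdfSync("sha256", shared, Buffer.alloc(0), "digital-me-query-v1", 32));
}

// Client side: encrypt a query to the (already attested) enclave public key.
function sealQuery(query: string, enclavePublicKey: KeyObject): SealedQuery {
  const eph = generateKeyPairSync("x25519");
  const key = deriveKey(eph.privateKey, enclavePublicKey);
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const ciphertext = Buffer.concat([cipher.update(query, "utf8"), cipher.final()]);
  return { ephemeralPublicKey: eph.publicKey, iv, ciphertext, tag: cipher.getAuthTag() };
}

// Enclave side: only the holder of the enclave private key can recover the plaintext.
function openQuery(sealed: SealedQuery, enclavePrivateKey: KeyObject): string {
  const key = deriveKey(enclavePrivateKey, sealed.ephemeralPublicKey);
  const decipher = createDecipheriv("aes-256-gcm", key, sealed.iv);
  decipher.setAuthTag(sealed.tag);
  return Buffer.concat([decipher.update(sealed.ciphertext), decipher.final()]).toString("utf8");
}
```

A fresh ephemeral key per query means that compromising one session key does not expose other queries, and the GCM tag makes any tampering by the host detectable inside the enclave.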

Zero-Knowledge Machine Learning (zkML) – For Verifiable Inference. zkML enables proving that a specific model produced a specific output without revealing the inputs. zkLLM (CCS 2024) proved LLaMA-2-13B inference in approximately 15 minutes with proof size under 200 KB. Lagrange Labs’ DeepProve-1 achieved the first production-ready proof of full GPT-2 inference at 158x faster than previous approaches.

5.2 Decentralized Compute Marketplace

MeChain operates a decentralized compute marketplace. Compute providers register their hardware capabilities with staked SHR as collateral, and a decentralized model registry provides on-chain attestation of model weights and provenance. Digital Me clients select providers based on latency, cost, TEE certification, and stake amount, while validators periodically challenge providers to verify inference quality. IBC interoperability connects the marketplace to Akash Network, Nillion, and ShareLedger.
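Client-side provider selection can be sketched as a filter on hard requirements (TEE certification and a minimum stake) followed by a weighted score over latency and cost. The field names, weights, and normalization scheme are hypothetical; the paper lists the selection criteria but not how they are combined.

```typescript
interface ComputeProvider {
  id: string;
  latencyMs: number;
  costPerKTokens: number; // price per 1,000 tokens (illustrative unit)
  teeCertified: boolean;
  stake: number;
}

interface SelectionPolicy {
  minStake: number;
  latencyWeight: number;
  costWeight: number;
}

// Pick the eligible provider with the lowest weighted score, or null if none qualify.
function selectProvider(providers: readonly ComputeProvider[], policy: SelectionPolicy): ComputeProvider | null {
  const eligible = providers.filter((p) => p.teeCertified && p.stake >= policy.minStake);
  if (eligible.length === 0) return null;
  // Normalize each metric by the best eligible value so the weights are comparable.
  const bestLatency = Math.min(...eligible.map((p) => p.latencyMs));
  const bestCost = Math.min(...eligible.map((p) => p.costPerKTokens));
  const score = (p: ComputeProvider) =>
    policy.latencyWeight * (p.latencyMs / bestLatency) + policy.costWeight * (p.costPerKTokens / bestCost);
  return eligible.reduce((best, p) => (score(p) < score(best) ? p : best));
}
```

Treating TEE certification and stake as hard filters, rather than scored factors, keeps a cheap but unprotected provider from ever outranking a certified one.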

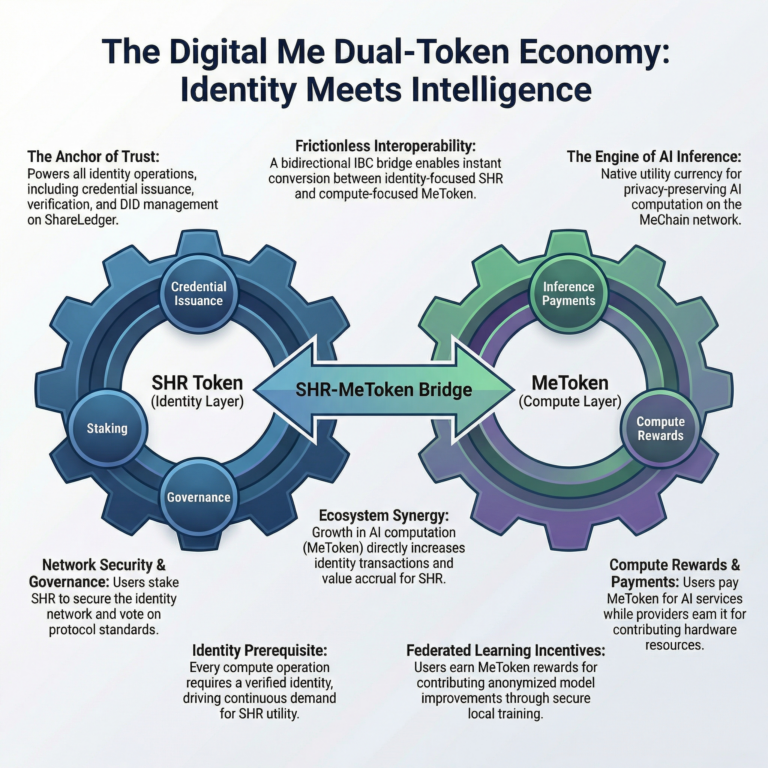

5.3 Dual-Token Economics: SHR and MeToken

The Digital Me ecosystem introduces a dual-token model that separates the identity and trust layer from the compute layer, with each token serving a distinct and complementary function.

5.3.1 SHR (ShareToken): The Identity & Trust Layer

SHR remains the native token of ShareLedger – the economic backbone of identity, trust, and governance:

| Function | Description |
|---|---|
| Identity Operations | Credential issuance, verification, revocation, and selective disclosure |
| Validator Staking | Validators stake SHR to secure the identity network via CometBFT |
| Governance | Protocol upgrades, identity standards, and trust framework parameters |
| Skills Marketplace | Developers earn SHR when users activate their published skills |

5.3.2 MeToken: The Compute & AI Layer

MeToken is the native utility token of MeChain, purpose-built for decentralized AI computation:

| Function | Description |
|---|---|
| Inference Payments | Users pay MeToken for privacy-preserving cloud inference |
| Provider Staking | Collateral for the compute marketplace, with slashing for misbehavior |
| Federated Learning | Rewards for anonymized model improvements via federated learning |
| MeChain Governance | Model registry, compute pricing, privacy requirements, and upgrades |

5.3.3 Token Synergy and Value Accrual

The dual-token model is designed so MeToken increases the utility and demand for SHR through reinforcing mechanisms: every MeChain operation requires a verified ShareLedger identity (DID) – more MeChain activity drives more ShareLedger transactions; all skills marketplace earnings flow through SHR; cross-chain verification fees consume SHR; and SHR holders govern the foundational identity layer that MeChain depends on.

In Summary

SHR secures the trust layer that makes everything possible, while MeToken powers the compute layer that makes everything useful. Neither can function without the other, and growth in either token drives demand for both.

5.3.4 MeToken Airdrop to ShareLedger Stakers

To bootstrap the MeChain ecosystem, ShareRing will conduct a one-time airdrop of MeToken to participants actively holding or staking SHR on ShareLedger at snapshot time. Eligible: SHR holders and stakers with tokens on ShareLedger (native chain), including wallet holdings, validator delegations, and governance participation. Not eligible: SHR held on centralized exchanges or as ERC-20 tokens on Ethereum. Only native ShareLedger holdings qualify.

5.3.5 SHR-MeToken Bridge

A dedicated bridge contract enables immediate, frictionless conversion between SHR and MeToken via IBC transfer, with conversion rates determined by an on-chain automated market maker (AMM) pool ensuring continuous liquidity and transparent pricing.
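The paper does not specify the AMM's pricing curve. Assuming a constant-product pool (x · y = k, the design popularized by Uniswap v2) with the fee taken from the input amount, a conversion quote can be computed as below. The `quoteSwap` function, the 0.3% (30 basis point) fee, and the reserve sizes are illustrative assumptions, and integer amounts stand in for on-chain base units.

```typescript
// Quote a constant-product swap of `amountIn` for the other token; fee in basis points.
function quoteSwap(amountIn: bigint, reserveIn: bigint, reserveOut: bigint, feeBps: bigint): bigint {
  if (amountIn <= 0n || reserveIn <= 0n || reserveOut <= 0n) {
    throw new Error("amounts and reserves must be positive");
  }
  // Scale by 10,000 so the fee can be applied in integer arithmetic.
  const amountInWithFee = amountIn * (10_000n - feeBps);
  // out = in_after_fee * reserveOut / (reserveIn + in_after_fee), rounded down.
  return (amountInWithFee * reserveOut) / (reserveIn * 10_000n + amountInWithFee);
}
```

Swapping 1,000 units against reserves of 1,000,000 on each side returns 996 rather than 1,000: roughly 3 units go to the fee and 1 to price impact, and larger trades move the price further.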

6. Phase 3: Adaptive Intelligence – RAG & Personalized Learning

Phase 3 transforms Digital Me from a reactive assistant into a proactive agent that learns from and adapts to each user through on-device RAG and federated LoRA fine-tuning – all while maintaining strict privacy guarantees.

6.1 On-Device RAG Architecture

Digital Me constructs a personal knowledge graph from user interactions, capturing entities, relationships, temporal patterns, and preferences – stored entirely on-device in an encrypted SQLite database with vector indexing, never transmitted to any server. The Adaptive RAG Pipeline implements Google AI Edge’s on-device framework with automatic privacy-aware chunking, GTE-small embedding, HNSW-indexed vector search for sub-millisecond retrieval, and intelligent context window management (32K tokens for Gemma 3n).
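The context-window management step can be sketched as greedy packing: rank retrieved chunks by similarity and add them until a token budget is spent, reserving room for the prompt and the answer. The four-characters-per-token estimate, the `packContext` function, and the reserve parameter are assumptions; retrieval itself (HNSW search over GTE-small embeddings) is taken as given and represented by precomputed scores.

```typescript
interface RetrievedChunk {
  text: string;
  score: number; // similarity from the vector index; higher is more relevant
}

// Rough token estimate (~4 characters per token for English); a real system would use the model tokenizer.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Greedily keep the highest-scoring chunks that still fit in the remaining budget.
function packContext(chunks: readonly RetrievedChunk[], contextWindow: number, reserved: number): RetrievedChunk[] {
  let budget = contextWindow - reserved;
  const selected: RetrievedChunk[] = [];
  const ranked = [...chunks].sort((a, b) => b.score - a.score);
  for (const chunk of ranked) {
    const cost = estimateTokens(chunk.text);
    if (cost <= budget) {
      selected.push(chunk);
      budget -= cost;
    }
  }
  return selected;
}
```

Skipping a chunk that does not fit, rather than stopping, lets a smaller but still relevant chunk use the remaining space.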

6.2 Federated LoRA Fine-Tuning

LoRA enables personalizing an LLM by training small adapter matrices (1-10 MB) rather than modifying full model weights. Digital Me continuously trains a personal LoRA adapter on-device during idle periods, learning communication style, frequently requested information, and typical workflows. The adapter is stored in the user’s encrypted Vault – representing the user’s “personality” for the AI.
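The adapter-size range follows from the LoRA parameterization: the pretrained weight W₀ stays frozen, and training learns a low-rank update BA scaled by α/r, with rank r much smaller than either dimension. The worked numbers use illustrative values (d = k = 2048, r = 8, 120 adapted matrices such as four attention projections in 30 layers, 16-bit weights), not Gemma 3n's actual configuration.

```latex
\begin{aligned}
W' &= W_0 + \tfrac{\alpha}{r}\, B A, \quad B \in \mathbb{R}^{d \times r},\ A \in \mathbb{R}^{r \times k},\ r \ll \min(d, k) \\
\text{params}(BA) &= r(d + k) = 8 \times (2048 + 2048) = 32{,}768 \quad (\text{vs. } dk = 4{,}194{,}304) \\
\text{adapter size} &\approx 120 \times 32{,}768 \times 2\ \text{bytes} = 7{,}864{,}320\ \text{bytes} \approx 7.9\ \text{MB}
\end{aligned}
```

Each adapted matrix trains under 1% of its full parameter count, which is why the whole adapter fits in a few megabytes.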

Users who opt in to federated learning contribute anonymized adapter updates to the MeChain network using state-of-the-art techniques including FlexLoRA for heterogeneous-rank contributions, FedBuff for 3.3x more efficient async aggregation, ECOLORA for 40-70% bandwidth savings, differential privacy at ε < 10 with strong downstream performance, and Ditto Regularization for jointly optimized global and personalized models.
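The privacy-preserving aggregation can be sketched as a generic DP-FedAvg step: clip each client's adapter update to a maximum L2 norm, average, and add Gaussian noise scaled to the clipping bound. This does not reproduce FlexLoRA, FedBuff, ECOLORA, or Ditto; the function names, clip norm, and noise multiplier are illustrative, and calibrating them to a specific ε requires a privacy accountant that is out of scope here. The Gaussian sampler is injected so the example is deterministic.

```typescript
type Vector = number[];

function l2Norm(v: readonly number[]): number {
  return Math.sqrt(v.reduce((s, x) => s + x * x, 0));
}

// Scale an update so its L2 norm is at most clipNorm, bounding any one user's influence.
function clip(v: readonly number[], clipNorm: number): Vector {
  const norm = l2Norm(v);
  const factor = norm > clipNorm ? clipNorm / norm : 1;
  return v.map((x) => x * factor);
}

// Clip, average, then add Gaussian noise with std = noiseMultiplier * clipNorm / n per coordinate.
function dpAggregate(
  updates: readonly (readonly number[])[],
  clipNorm: number,
  noiseMultiplier: number,
  gaussian: () => number, // returns standard normal samples
): Vector {
  const n = updates.length;
  if (n === 0) throw new Error("need at least one update");
  const sum: Vector = new Array<number>(updates[0].length).fill(0);
  for (const u of updates) {
    clip(u, clipNorm).forEach((x, i) => {
      sum[i] += x;
    });
  }
  const std = (noiseMultiplier * clipNorm) / n;
  return sum.map((s) => s / n + std * gaussian());
}
```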

6.3 Proactive Intelligence

With RAG and LoRA personalization, Digital Me transitions to a proactive agent: personalized morning briefings, smart notification filtering, automated workflow suggestions, contextual credential preparation at known venues, financial spending insights, document lifecycle management, and predictive travel assistance – all assembled on-device.

7. Phase 4: The Autonomous Digital Twin

Phase 4 represents the full realization of Digital Me: an autonomous agent that can negotiate, transact, and represent you in the digital economy.

7.1 Verifiable Credential Delegation

Users issue W3C Verifiable Credentials to their Digital Me agent specifying scoped permissions, anchored on ShareLedger. Each delegation credential specifies the scope of permitted actions, financial limits, time-bounded validity, revocation conditions, and required user confirmation thresholds. Service providers verify the delegation chain back to the user’s DID.
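A delegation credential and the check a service provider might run against it can be sketched as follows. The envelope uses W3C VC Data Model 2.0 fields (`@context`, `type`, `issuer`, `validFrom`, `validUntil`, `credentialSubject`); the `AgentDelegationCredential` type, every field inside `credentialSubject`, and the `checkDelegation` function are hypothetical. Proof verification and the revocation lookup on ShareLedger are assumed to have happened already, with the result passed in as `revoked`.

```typescript
interface DelegationCredential {
  "@context": string[];
  type: string[];
  issuer: string; // the user's DID
  validFrom: string; // ISO 8601
  validUntil: string; // ISO 8601
  credentialSubject: {
    id: string; // the agent's DID
    allowedActions: string[];
    spendLimit: number; // per-action ceiling (illustrative units)
    confirmAbove: number; // amounts above this still need live user confirmation
  };
}

type DelegationDecision = "deny" | "allow" | "ask-user";

function checkDelegation(vc: DelegationCredential, action: string, amount: number, now: Date, revoked: boolean): DelegationDecision {
  const s = vc.credentialSubject;
  if (revoked) return "deny";
  const t = now.getTime();
  if (t < new Date(vc.validFrom).getTime() || t > new Date(vc.validUntil).getTime()) return "deny";
  if (!s.allowedActions.includes(action) || amount > s.spendLimit) return "deny";
  return amount > s.confirmAbove ? "ask-user" : "allow";
}
```

Separating the spend limit from the confirmation threshold lets a user allow small purchases autonomously while still being asked about larger ones within the same delegation.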

7.2 Agent-to-Agent Commerce

Digital Me agents communicate using Google’s A2A protocol for discovery and task negotiation, Anthropic’s MCP for tool and data access, and DIDComm v2.1 for secure messaging. Scenarios include automated hotel booking with credential verification, credential-gated access to professional services, decentralized marketplace transactions with CosmWasm escrow, and cross-agent collaboration for group planning.

7.3 Cross-Chain Identity Interoperability

MeChain’s IBC connectivity enables Digital Me to operate across the entire Cosmos ecosystem and, via IBC v2 bridges, into Ethereum and Solana ecosystems – presenting verified identity on DeFi protocols, using credentials on freelancer marketplaces, or proving nationality on travel platforms from a single, user-controlled identity.

7.4 The Self-Sovereign AI Economy

Phase 4 enables a new economic model where users are active participants: data sovereignty with monetizable anonymized insights, skill authorship earnings in SHR, compute provision on MeChain, federated learning rewards, and configurable agent services (e.g., a professional translator’s Digital Me offering verified translation).

8. Security Architecture

Digital Me’s security architecture is designed from first principles to prevent the classes of attacks that devastated OpenClaw. Every design decision assumes the AI’s context will be compromised and ensures that compromise cannot lead to unauthorized data access or transactions.

8.1 Threat Model

| Threat | OpenClaw Status | Digital Me Defense |
|---|---|---|
| Direct prompt injection | Vulnerable – no input sanitization | Input taint tracking; embedding routing bypasses LLM for most operations |
| Indirect prompt injection | Catastrophic – CSS-hidden instructions trigger actions | Data/control plane separation at SDK level; tainted data cannot invoke skills |
| Credential theft | Plaintext storage under ~/.openclaw/ | PIN-encrypted Vault; credentials never accessible to MeModule code |
| Malicious skills | 12% of ClawHub skills were malicious | 6-step security review; DID-bound author identity; code signing |
| Token exfiltration | CVE-2026-25253: 1-click RCE | No exposed gateway; sandboxed WebView within native app |
| Memory poisoning | SOUL.md/MEMORY.md enable time-shifted attacks | Encrypted Vault memory; context cleared on anomaly detection |
| Privilege escalation | eval() x100, execSync() x9, sandbox bypass | No eval/exec; capability-based permissions; bridge-level enforcement |
| Cross-session leakage | Shared plaintext state across all sessions | Per-skill isolated state; encrypted storage; no shared global state |

8.2 The Vault SDK as Security Boundary

The ShareRing Vault SDK provides the hardware-backed security boundary that OpenClaw lacks entirely. All sensitive operations require explicit user PIN confirmation through the native app, outside the MeModule’s WebView. There is no API to suppress the confirmation dialog, auto-approve operations, or bypass PIN verification – these are hardcoded architectural constraints, not configuration options.

Worst-Case Scenario

Even if an attacker achieves complete control of the AI’s context via prompt injection, the maximum possible damage is: the AI can display misleading text in the chat interface and can attempt to request operations that the user must still approve. It cannot silently access, exfiltrate, or modify any user data.

8.3 Continuous Security Assurance

Digital Me implements automated red teaming with multi-turn prompt injection probes, dependency scanning with immediate patch deployment, a public bug bounty program covering all components, regular third-party security audits with published results, and on-device anomaly monitoring for unusual patterns.

9. Competitive Landscape

| Competitor | Data Ownership | Identity Layer | Privacy Model | Key Limitation |
|---|---|---|---|---|
| Apple Intelligence | Apple-controlled | Apple ID (centralized) | On-device + PCC | No data portability, closed ecosystem |
| OpenClaw | User-hosted | None | Local but unprotected | Catastrophic security failures |
| ChatGPT / Claude / Gemini | Provider-controlled | Centralized | Cloud-only | No privacy guarantees, no identity |
| Ollama / PrivateGPT | User-controlled | None | Fully local | No persistence, no identity, no sync |
| Fetch.ai / ASI Alliance | Network-controlled | Limited DID | Varies by service | No consumer product, complex UX |
| Digital Me ✦ | User-sovereign | W3C VCs on ShareLedger | Layered: device + TEE + HE + ZK | New entrant; must prove adoption |

Digital Me’s unique competitive advantage is the combination of three capabilities no competitor possesses: (1) production self-sovereign identity infrastructure with regulatory certification (UK DIATF, Australian government age assurance trial), (2) privacy-preserving AI with layered techniques from on-device to TEE to ZK, and (3) an extensible, open-source skills marketplace with security guarantees. The EU AI Act reaches full applicability in August 2026, and Digital Me’s privacy-by-design architecture positions it as compliant by default.

10. Regulatory Compliance

Digital Me is designed for compliance across the world’s most stringent jurisdictions:

| Framework | Digital Me Position |
|---|---|
| EU AI Act (August 2026) | Open-source code, published audits, and on-chain audit trails meet transparency requirements. |
| GDPR | Full compliance by design: users control, export, and delete all data. |
| eIDAS 2.0 | W3C Verifiable Credentials compatible with EUDI Wallet standards. |
| UK DIATF | ShareRing already holds DIATF certification for identity verification. |
| Australian Digital Identity | Selected for the Government's $6.5M age assurance trial. |
| NIST AI Agent Security | Architecture addresses core concerns identified in NIST CAISI's January 2026 RFI. |

11. Development Roadmap

Subject to change

| Phase | Timeline | Key Deliverables |
|---|---|---|
| Phase 1A: MVP | Q1 2026 (Feb-Mar) | Core MeModule, bridge layer, chat UI, embedding router, 10+ built-in skills, cloud LLM integration |
| Phase 1B: Full Release | Q1-Q2 2026 | Complete 40+ skills library, community marketplace, open-source publication, security audit |
| Phase 2A: MeChain Testnet | Q3 2026 | MeChain testnet, TEE-based inference PoC, compute provider onboarding, SHR integration |
| Phase 2B: MeChain Mainnet | Q4 2026 | Mainnet launch, decentralized inference marketplace, IBC bridge, zkML verification |
| Phase 3A: RAG & Knowledge Graph | Q1 2027 | On-device RAG pipeline, personal knowledge graph, adaptive context retrieval |
| Phase 3B: Federated LoRA | Q2 2027 | On-device LoRA training, federated learning protocol, proactive intelligence |
| Phase 4A: Agent Autonomy | Q3-Q4 2027 | VC delegation, agent-to-agent communication, cross-chain identity, autonomous transactions |
| Phase 4B: Digital Twin Economy | Late 2027 | Agent marketplace, data sovereignty economics, enterprise integration, full digital twin |

12. Conclusion

The age of personal AI agents is here. The question is not whether people will delegate their digital lives to AI systems – they already are, as OpenClaw’s explosive adoption demonstrates. The question is whether those AI systems will be built on foundations of identity, privacy, and security, or whether they will continue to be architecturally incapable of protecting the very people who trust them.

Digital Me represents a fundamentally different approach. By anchoring every AI operation to a verified self-sovereign identity, enforcing security at the SDK level rather than relying on the AI’s good behavior, implementing layered privacy from on-device to TEE to zero-knowledge proofs, and maintaining full open-source transparency, Digital Me resolves the paradox that has plagued every other personal AI project: how to build an agent that is simultaneously deeply personal and provably secure.

The technological foundations are ready. Flagship smartphones run 3B-parameter models at conversational speeds. Federated LoRA enables per-user personalization with adapters under 10 MB. W3C Verifiable Credentials v2.0 is a full Recommendation. The Cosmos SDK provides battle-tested blockchain infrastructure with single-slot finality and zero-exploit IBC connectivity. And ShareRing’s existing SSI infrastructure – already certified by UK DIATF and selected for the Australian Government’s age assurance trial – provides the non-replicable foundation that every competitor lacks.

The competitive window is open. No project in the market today combines self-sovereign identity with privacy-preserving AI. The EU AI Act reaches full applicability in August 2026. NIST is actively developing frameworks for AI agent security. The market is moving, and the infrastructure is ready.

Digital Me is not just an AI assistant.

It is the beginning of digital self-sovereignty – a world where your AI agent is truly yours, verified by your identity, bounded by your permissions, and owned by you. The future of personal AI starts here.

– END OF WHITEPAPER –